The feedback blind spot: surveys are only telling you part of the customer story

Your feedback programme probably has a hole in it, and your survey data cannot show you where it is. Most CX teams rely on surveys (NPS, CSAT, CES) to measure customer experience. But surveys only capture what people say when asked. Public reviews on Google and Trustpilot capture what people say when they choose to speak.

These two sources often tell different stories, and the gap between them is where customer problems hide.

Before we dive into the Feedback Blind Spot, here’s what you’ll learn in this post:

- Why survey scores and public review ratings often contradict each other, and what that gap means

- How consumer trust in reviews has shifted dramatically since 2020, and why that matters for your feedback programme

- The commercial impact of public ratings on revenue, with specific data

- Why multi-location businesses face a compounding version of this problem

- How to move from “monitoring reviews” to treating them as a genuine feedback channel

- The structural differences between survey feedback and review feedback, and why you need both

The gap you are probably not measuring

You run a solid CX feedback programme. CSAT surveys go out after every interaction. NPS runs quarterly. Response rates are respectable. The dashboard looks green.

Then someone on the team checks Google. A thread of 2-star reviews, all from the past month, all complaining about the same thing. Your surveys never flagged it.

This is not a rare scenario. It is the default state for most CX teams.

Surveys and public reviews are two different lenses on customer experience. Surveys show you what customers say when you ask. Reviews show you what customers say when nobody asks but they feel compelled to speak anyway. These are often different people, at different moments, saying different things.

The space between those two pictures is what we call the feedback blind spot. And for most organisations, it is the single biggest gap in their understanding of customer experience.

Why surveys and reviews tell different stories

The difference is structural, not accidental.

Surveys are controlled. You choose the timing, the questions, and the audience. That control is powerful for measurement. It gives you benchmarks, trends, and standardised data you can track over time.

But control introduces bias. Surveys only capture people willing to respond. Typical response rates for email surveys sit between 5% and 30%, depending on the industry. You are hearing from a self-selecting minority. The customers who had a terrible experience and simply left? They are not in your data.

Reviews are uncontrolled. Customers leave them on their own terms, in their own words, at their own pace. Research from the BrightLocal Local Consumer Review Survey 2025 shows that 81% of consumers are most likely to leave reviews when a business goes above and beyond, or falls significantly short. Reviews skew toward strong opinions, both positive and negative.

That skew is a feature, not a bug. Reviews capture the extremes that surveys often miss: the experiences so good or so bad that someone took the time to write about them publicly. And those extremes are where the real operational signals live.

Here is what makes this tricky: according to Capital One Shopping Research, 96% of customers specifically seek out negative reviews. They are not just reading your average rating. They are looking for the worst experiences to judge how you handle problems. Your response to a bad review is often more influential than the review itself.

The numbers that should worry CX leaders in 2026

The commercial impact of public reviews is not theoretical. It is measurable.

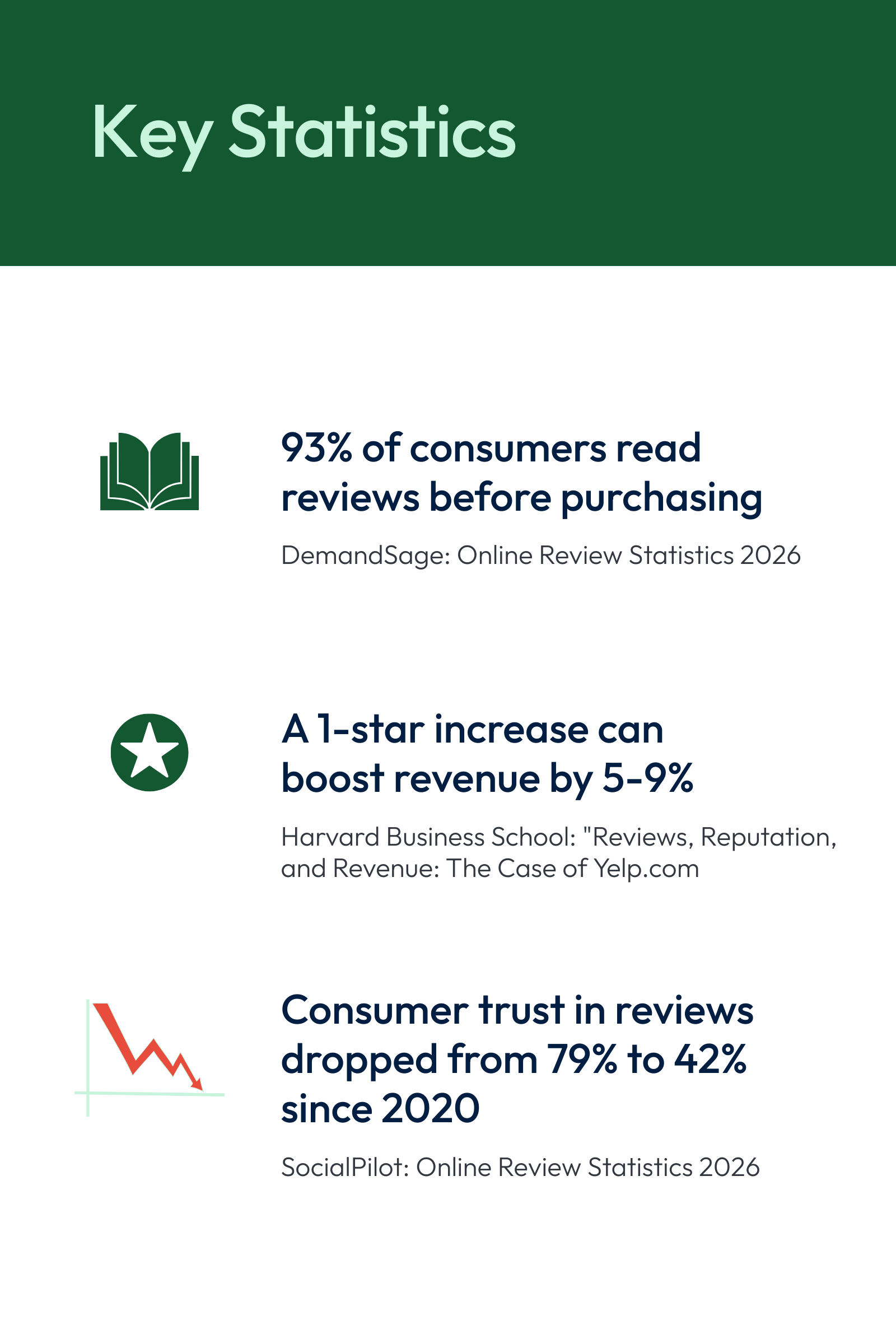

Reviews directly affect revenue. A one-star increase on a business profile can boost revenue by 5 to 9%, according to research from Harvard Business School. Businesses with ratings of 4 stars or higher generate 32% more revenue than those with lower ratings. And businesses that respond to at least 25% of their reviews earn 35% more than those that do not respond at all.

Those are the industry averages. But how does this apply to your organisation?
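One rough way to put those figures against your own numbers is a back-of-envelope estimate. The sketch below assumes the 5 to 9% range quoted above scales linearly with each star gained and applies evenly to a hypothetical annual revenue figure. The function name, parameters, and example revenue are illustrative assumptions, not a published model, and real results will vary by sector and platform.

```python
def revenue_uplift_range(
    annual_revenue: float,
    stars_gained: float = 1.0,
    low_pct: float = 5.0,
    high_pct: float = 9.0,
) -> tuple[float, float]:
    """Return a (low, high) estimate of annual revenue uplift.

    Assumption: uplift scales linearly with stars gained, using the
    5 to 9% per-star range cited in the text as default bounds.
    """
    if annual_revenue < 0 or stars_gained < 0:
        raise ValueError("annual_revenue and stars_gained must be non-negative")
    low = annual_revenue * low_pct * stars_gained / 100
    high = annual_revenue * high_pct * stars_gained / 100
    return low, high


# Hypothetical example: a business turning over 2,000,000 a year
# that improves its public rating by half a star.
low, high = revenue_uplift_range(2_000_000, stars_gained=0.5)
print(f"Estimated uplift: {low:,.0f} to {high:,.0f} per year")
```

Treat the output as a conversation starter for a business case rather than a forecast: the linear scaling is a simplification chosen to keep the arithmetic transparent.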


Trust in reviews is shifting, but they still drive decisions. According to SocialPilot’s Online Review Statistics, consumer trust in online reviews has dropped significantly, from 79% who trusted reviews as much as personal recommendations in 2020 to roughly 42% in 2025. Part of this decline is driven by awareness of fake reviews: data from Capital One shows that 82% of consumers say they have read a fake review in the past year.

But declining trust has not reduced usage. DemandSage’s Online Review Statistics report found that 93% of consumers still read reviews before making a purchase decision. Capital One’s Shopping Research backs this up, noting that 70% rarely visit an unfamiliar business without checking reviews first. And consumers spend an average of 13 minutes and 45 seconds reading reviews before deciding to trust a local business.

What has changed, though, is how consumers read reviews.

They are more sceptical.

They look for specificity and recency.

DemandSage reports that 83% of customers believe reviews only hold value if they are recent and relevant. And data from SocialPilot shows that 88% of customers are more likely to use a business that responds to all of its reviews, positive and negative.

So now the market is responding accordingly. According to Verified Market Research’s report, the online reputation management software market was valued at $5.2 billion in 2024 and is projected to reach $14.02 billion by 2031, growing at a CAGR of 13.2%.

What does this mean? Organisations are investing because they have seen the cost of not paying attention to online reviews.

What happens when you ignore the blind spot

For a single-location business, the consequences of ignoring reviews are manageable. You might miss a few complaints. You might be slow to respond to a negative review. The damage is limited.

For multi-location businesses, the blind spot compounds.

Twenty locations means twenty Google Business profiles and potentially twenty Trustpilot pages. Each location generates its own stream of reviews. A single location might receive 10 to 20 reviews per month. Scale that to 50 locations and you are looking at 500 to 1,000 reviews every month.

Without a systematic approach, three things happen:

Patterns go undetected. One complaint about wait times at a single location is an anecdote. The same complaint appearing across five locations is a systemic issue. But if nobody is looking at all five locations together, the pattern stays invisible.

Responses become inconsistent or nonexistent. Each location may handle reviews differently. Some respond promptly. Others ignore reviews entirely. The result is an inconsistent brand experience that confuses customers and weakens trust. The BrightLocal Local Consumer Review Survey 2025 tells us that 38% of customers expect businesses to respond to negative reviews within two to three days. When responses come weeks later, or not at all, the damage is already done.

Leadership flies blind. When someone senior asks “how are we doing in the North?”, the CX team scrambles to pull together spreadsheets from multiple Google accounts. There is no single source of truth that connects public perception with internal survey data.
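The first of those three problems, patterns going undetected, is the easiest to illustrate. The sketch below assumes reviews have already been tagged with a theme (by hand or by a text-analysis tool) and simply counts how many distinct locations mention each one. The sample data, the function name, and the three-location threshold are all hypothetical choices for illustration.

```python
from collections import defaultdict

# Hypothetical sample: (location, theme) pairs, assumed to be tagged already.
tagged_reviews = [
    ("Leeds", "wait times"),
    ("Leeds", "parking"),
    ("Bristol", "wait times"),
    ("Cardiff", "wait times"),
    ("Cardiff", "staff attitude"),
    ("Bristol", "wait times"),
]


def systemic_themes(reviews, min_locations=3):
    """Return themes raised at min_locations or more distinct locations.

    A theme seen at one site is an anecdote; the same theme at several
    sites is treated here as a candidate systemic issue.
    """
    locations_by_theme = defaultdict(set)
    for location, theme in reviews:
        locations_by_theme[theme].add(location)
    return {
        theme: sorted(locations)
        for theme, locations in locations_by_theme.items()
        if len(locations) >= min_locations
    }


print(systemic_themes(tagged_reviews))
```

Counting distinct locations rather than raw mentions is deliberate: repeat complaints at one site do not inflate the signal, so only issues that genuinely span locations surface.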

How the best CX teams close the gap

Closing the feedback blind spot is not about adding more tools. It is about changing how you think about reviews.

Treat reviews as a feedback channel, not a marketing concern. Most organisations treat review monitoring as a comms or marketing responsibility. The team reads reviews, responds to the bad ones, and moves on. But reviews contain the same kind of insight as surveys: themes, sentiment, recurring issues, location-level patterns. They belong in the CX programme, not the PR inbox.

Analyse reviews alongside surveys, not separately. When you can see both sources together, discrepancies become visible. If your CSAT for a location is 85% but the Google rating is 3.1, that gap deserves investigation. Maybe your survey is reaching the wrong customers, or catching them at the wrong moment, or your satisfied customers simply are not leaving public reviews.
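A side-by-side check like this can be sketched in a few lines. The example below assumes you hold a CSAT percentage and a public star rating for each location, and it rescales the 1 to 5 star rating onto a 0 to 100 scale so the two can be compared. That rescaling, the 20-point threshold, and all names and sample figures are illustrative assumptions. CSAT and star ratings measure different things, so the gap is a prompt to investigate, not a precise metric.

```python
from dataclasses import dataclass


@dataclass
class LocationScores:
    name: str
    csat_pct: float       # survey CSAT as a percentage, 0-100
    review_rating: float  # public star rating, 1-5


def rating_to_pct(rating: float) -> float:
    """Rescale a 1-5 star rating to 0-100 (a crude, assumed mapping)."""
    return (rating - 1.0) / 4.0 * 100.0


def flag_gaps(locations, threshold_pts: float = 20.0):
    """Return (name, gap) for locations where survey and review scores diverge.

    A positive gap means the survey looks better than the public rating.
    """
    flagged = []
    for loc in locations:
        gap = loc.csat_pct - rating_to_pct(loc.review_rating)
        if abs(gap) >= threshold_pts:
            flagged.append((loc.name, round(gap, 1)))
    return flagged


# Hypothetical locations; the first mirrors the 85% CSAT / 3.1 rating example.
sample = [
    LocationScores("North", csat_pct=85.0, review_rating=3.1),
    LocationScores("South", csat_pct=82.0, review_rating=4.4),
]
print(flag_gaps(sample))
```

Any location that appears in the output is a candidate for the questions above: who the survey is reaching, when it is sent, and whether satisfied customers are leaving public reviews at all.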

Use review data to validate and challenge survey findings. If survey comments mention long wait times and public reviews say the same thing, the issue is confirmed. If only one source mentions it, the problem might be limited to a specific segment. Reviews act as a reality check on your controlled data.

Make review insights available to the people who can act on them. Location managers should see reviews for their location. Regional leads should see trends across their region. Head office should see the full picture. You need the right data forwarded to the right level.

SmartSurvey’s Reputation Management brings Google and Trustpilot reviews into the same platform where you manage surveys. AI-powered sentiment and thematic analysis runs across every review. You can spot patterns, compare locations, respond directly, and track whether your improvements are showing up in your ratings over time.

But the tool is secondary. The mindset shift comes first. Start thinking of your public reputation as feedback that happens to be visible, not as a separate problem to manage.

Common questions about integrating reviews into your feedback programme

How is review data different from survey data?

Surveys give you structured, standardised data you control. Reviews give you unstructured, public data you do not control. Surveys are better for benchmarking and tracking specific metrics over time. Reviews are better for surfacing strong opinions, catching issues surveys miss, and understanding public perception.

Should we respond to every review?

Yes - you should aim to respond to every review and remain proactive in listening to your customers. Prioritise negative reviews with specific complaints, mixed reviews where a good response could change perception, and any review that asks a question or mentions an employee by name. Positive reviews deserve acknowledgement but do not need the same urgency.

How do we handle fake reviews?

Report them to the platform. Google and Trustpilot both have processes for flagging fake or spam reviews. Trustpilot removed 4.5 million fake reviews in 2024, with 90% detected automatically by AI.

What if our survey scores are good but our review ratings are bad?

That gap is the signal. It usually means one of three things: your survey is reaching a different population than your reviewers, your survey timing misses the moments that generate strong opinions, or you have a segment of unhappy customers who are not in your survey sample. Investigate the gap rather than dismissing it.

Do public review ratings actually affect B2B decisions?

Yes. 56% of B2B software buyers consider how much users like a product when making purchasing decisions, and 21% say finding solid customer references is a major hurdle. Your public ratings are visible to everyone, including procurement teams.